For me it is just an additional safety layer, and I don't want to spend a huge amount of money on it. My experience is that during peak hours in the USA the upload speed drops to 1/3 or worse. During the late night/early morning/working hours the upload speed is better. The same is true for the probability of 500/504 errors. I restarted duplicacy around 3:30 MST and the speed is 6.5 MiB/sec so far. It was 1.7 MiB/sec around 20:00 MST, with many more 500/504 errors. So I suspect it is related to server load/user activity.

Because of the above, a timeout value might still be useful during busy hours. I will clarify with OpenDrive support. Recreating the webdav connection on backup threads might also help. I suspect this is why they asked about the possibility to increase the timeout or configure reconnect parameters in duplicacy.
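The idea of riding out transient 500/504 responses with retries instead of aborting the whole backup can be sketched roughly as below. This is not duplicacy's code and does not describe any setting it exposes. `upload_with_retry`, `upload_once`, and all parameter names and defaults are hypothetical and only illustrate the general technique (exponential backoff with jitter).

```python
import random
import time

# Status codes treated as transient. 500 and 504 are the ones seen in this
# thread; 502 and 503 are added here as an assumption.
TRANSIENT_STATUSES = {500, 502, 503, 504}


def upload_with_retry(upload_once, max_attempts=5, base_delay=1.0,
                      max_delay=60.0, sleep=time.sleep):
    """Call upload_once() until it returns a non-transient HTTP status.

    upload_once is a hypothetical callable that performs one upload attempt
    (it could open a fresh connection each time) and returns the status code.
    Timeouts and connection errors are treated like transient statuses.
    Returns (status, attempts_used); raises RuntimeError when out of attempts.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            status = upload_once()
        except (TimeoutError, ConnectionError):
            status = None  # no response at all: retry as well
        if status is not None and status not in TRANSIENT_STATUSES:
            return status, attempt
        if attempt == max_attempts:
            break
        # Exponential backoff: base_delay, 2x, 4x, ... capped at max_delay,
        # plus random jitter so parallel threads do not retry in lockstep.
        delay = min(max_delay, base_delay * 2 ** (attempt - 1))
        sleep(delay + random.uniform(0, delay / 2))
    raise RuntimeError(f"upload still failing after {max_attempts} attempts")


if __name__ == "__main__":
    # Simulated server: two transient errors, then success.
    responses = iter([504, 500, 201])
    print(upload_with_retry(lambda: next(responses), sleep=lambda s: None))
```

With 10 upload threads, the jitter matters: without it, all threads that hit a 504 at the same moment would come back at the same moment too.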

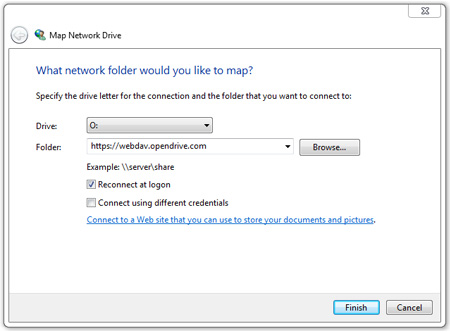

I would like to upload 3.9TB of data, ~400000 files, using 10 threads and a 32MB average chunk size (min/max is default: /4, *4), encrypted. Restarting an incomplete backup works (it takes a few minutes to continue), but after several hours of activity the process fails with similar errors.

I contacted OpenDrive support too; they asked whether it is possible to fine-tune the webdav parameters. I checked the duplicacy docs and found nothing related. Is it possible to set parameters for the duplicacy webdav backend? I am also wondering how connections are handled in the case of multiple threads. Is the connection recreated periodically? Or on each upload? Or only once for each thread?

Also, I was thinking of mounting webdav as a local fuse fs and doing a local-to-local backup instead of local-to-webdav. I might have more control over the webdav parameters. Would that be more reliable?

I am running duplicacy on QNAP (intel x64 arch, linux).

The OpenDrive "unlimited" plan for personal use offers 10TB of storage without any official limitations, and 20TB for business users, at the same price as Google Drive 2TB storage. A few aspects make it special, like the ability to use OpenDrive on WordPress through its dedicated WordPress plugin. WordPress plugins for cloud storage are rare, and OpenDrive is one of the few cloud storage solutions that have this option. Developers can also access OpenDrive through WebDAV and the available API.

I checked other options too: I was able to reach 32 MiB/sec upload speed with duplicacy on Google Drive, but they have no reasonable plan for 4-6-8-10 TB of data. Previously I used HubiC (I still have an account for 10 TB), but the up/down speed is worse (1/10) with duplicacy compared to OpenDrive. I also tried another provider, but their swift api is not compatible with the duplicacy backend (or duplicacy is not compatible with it; I was not able to get it working). So I have tried many options offering 10TB at a reasonable price. I have been using cloud backups for 4 years, with no incidents so far.
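To put the numbers from this thread in perspective, here is a rough back-of-the-envelope estimate of the chunk sizes, chunk count, and total upload time at the two observed speeds (1.7 and 6.5 MiB/sec). It assumes that 3.9TB means decimal terabytes, that the 32MB average chunk size means 32 MiB, and that the speed stays constant, which it clearly does not in practice. All names in the sketch are made up for illustration.

```python
MIB = 2**20

TOTAL_BYTES = 3.9e12       # 3.9 TB from the post, assumed decimal terabytes
AVG_CHUNK = 32 * MIB       # 32MB average chunk size, assumed to mean MiB


def chunk_bounds(avg, ratio=4):
    """Min and max chunk size for the '/4, *4' default quoted in the post."""
    return avg // ratio, avg * ratio


def upload_days(total_bytes, mib_per_sec):
    """Days needed to upload total_bytes at a constant rate in MiB/sec."""
    seconds = total_bytes / (mib_per_sec * MIB)
    return seconds / 86400


min_chunk, max_chunk = chunk_bounds(AVG_CHUNK)
approx_chunks = round(TOTAL_BYTES / AVG_CHUNK)

if __name__ == "__main__":
    print(f"chunk size range: {min_chunk // MIB} to {max_chunk // MIB} MiB")
    print(f"approximate number of chunks: {approx_chunks}")
    for rate in (1.7, 6.5):  # speeds reported at 20:00 and 3:30 MST
        print(f"{rate} MiB/sec: about {upload_days(TOTAL_BYTES, rate):.1f} days")
```

Under these assumptions the backup is on the order of a hundred thousand chunks, and the difference between the peak-hour and off-peak speeds is the difference between several weeks and about one week of continuous uploading.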
I am using opendrive with the webdav backend (DUPLICACY_STORAGE="webdav://***********"). The backup works well for a while but gets slower and slower (9MiB/sec -> 3MiB/sec) and eventually fails with several errors, at which point the duplicacy process stops.

If the problem persists, try generating a new authid token from: -> : GetResponse timed out -> : Aborted.
  at (IAsyncResult asyncResult) in :0
  at +AsyncWrapper.OnAsync (IAsyncResult r) in :0
  --- End of inner exception stack trace ---
  at +AsyncWrapper.GetResponseOrStream () in :0
  at .GetResponse () in :0
  at ( req, System.Object requestdata) in :0
  at .List () in :0
  at .FilelistProcessor.RemoteListAnalysis ( backend, options, .LocalDatabase database, IBackendWriter log, System.String protectedfile) in :0
  at .FilelistProcessor.VerifyRemoteList ( backend, options, .LocalDatabase database, IBackendWriter log, System.String protectedfile) in :0
  at .BackupHandler.PreBackupVerify ( backend, System.

I'm using version: Duplicati - 2.0.2.1_beta_
Browser: Chrome Version. 89 (Official Build) (64-bit)

Does Duplicati store logfiles somewhere? I couldn't find anything in /var/log or in ~/.config/Duplicati.

Failed to authorize using the OAuth service: GetResponse timed out.
